Integrated Algorithmic Impact Assessment Case Study

June 2023 - 4-month sprint

Solo User Researcher

Background

Algorithms are increasingly used in criminal justice systems worldwide. They are frequently developed by the private sector and applied by law enforcement agencies. Potential advantages for law enforcement include enhanced decision-making, efficiency and uniformity. Offenders are often given harsher sentences based on forecasts of how dangerous they will be in the future. For people on probation or parole who are supervised in the community, these forecasts shape the conditions of their supervision. Such forecasts rely on predicted "recidivism", which means being charged with or convicted of a new crime, no matter how minor.

The term "Algorithmic Impact Assessment" (AIA) refers to various processes and documents used to assess the development and impact of algorithmic systems. AIAs belong to a growing set of tools for holding these systems accountable, alongside audits, datasheets, labels and model cards. They aim to document algorithmic systems in order to reduce potential harm to individuals and vulnerable groups. While some existing AIAs are strong within their own domains, none ties together the varied concerns and disciplines in a way that would truly benefit the UK’s Criminal Justice System. It is therefore necessary to draw on the strengths of each and integrate them into a multidisciplinary AIA that provides comprehensive and rigorous guidelines and recommendations for the use of algorithmic decision-making tools.

Research Goal

The research goal was to develop and recommend a conceptual model for Algorithmic Impact Assessment (AIA) tailored to the UK’s criminal justice system while addressing potential misuse of AI tools.

Research Questions

RQ1) How can a conceptual model for Algorithmic Impact Assessment (AIA) be adapted for the criminal justice system, integrating existing AIAs and incorporating perspectives from both AI tool users (e.g., police officers) and stakeholders (e.g., computer scientists) through participatory design research?

RQ2) How can potential misuse of these AI tools be mitigated?

Research Impact

Research Insights

- Policymakers should ensure that AIAs ask thoughtful questions of public-sector and private organisations (e.g. police forces using AI tools, developers creating AI models) and demand thoughtful answers in return (e.g. “For what purpose are you seeking access to this sensitive demographic information? Please elaborate.”). This is essential to avoid reinforcing existing biases in these AI tools.

- Users such as police officers need training before using these AI tools so that they neither overtrust nor undertrust the algorithm. If users understand how the algorithm reaches its outputs, they will be able to document their own decision-making process accordingly. That documentation can then withstand public scrutiny and help restore the public's faith in police decision-making.

Business Values

The business value of this research lies in enhancing the ethical and effective deployment of AI tools within the criminal justice system. By developing a tailored conceptual model for Algorithmic Impact Assessment (AIA) and mitigating potential misuse of AI tools, businesses operating within the criminal justice sector can promote fairness, transparency, and accountability, thereby fostering trust among stakeholders and communities. Additionally, this research may help organisations avoid legal and reputational risks associated with unethical or biased AI implementations, ultimately contributing to long-term sustainability and responsible innovation in the industry.

Actionable Recommendations

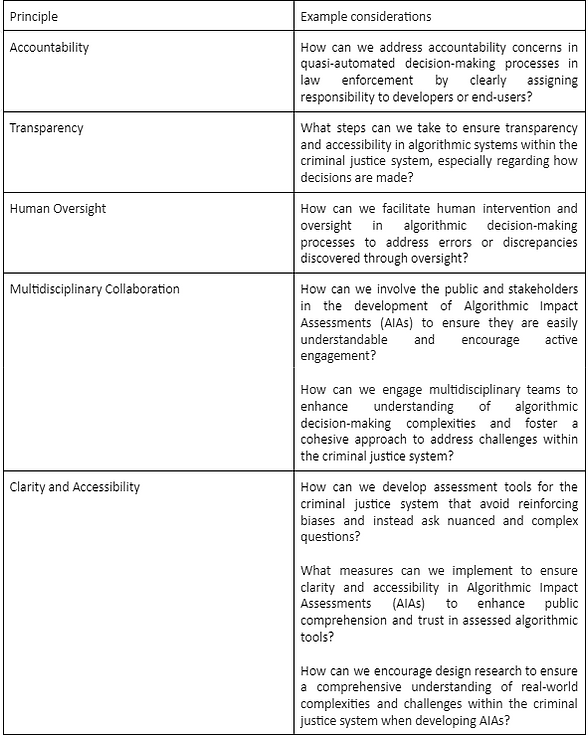

Table 1: Key design choices addressing the research goal

Research Roadmap

Research Process Breakdown

- Conducted generative research to identify research gaps (peer-reviewed publications, white papers and published AIAs from different nations, e.g. Canada and the UK)

- Developed the research proposal

- Identified internal and external stakeholder and user groups (e.g. North Yorkshire Police, AI researchers, UX designers) and created a persona for each

- Recruited participants (N=8) from stakeholder and user groups through purposive and snowball sampling

- Managed stakeholder relationships to schedule participatory design workshops, both in person and remotely using Miro

- Facilitated workshops to encourage multidisciplinary stakeholder co-participation, using techniques such as affinity mapping and dot voting

- Coded and synthesised the data through Reflexive Thematic Analysis using Dovetail and Excel

- Presented research insights and actionable recommendations to stakeholders based on the data analysis

- Wrote the final report summarising findings and recommendations

Choosing the Research Plan

I had a significant time constraint: only four months to complete the study, including the write-up. Usually I would conduct primary research to collect data directly from users, e.g. by running usability testing sessions on the AI tools used by North Yorkshire Police. In this case, however, I relied on external data on market trends and competitive benchmarking, and ran two participatory workshops with current users and stakeholders.

Results from market trend analysis and competitive benchmarking

- No standardised guidelines exist anywhere for AI tools in the criminal justice system.

- Insufficient model literacy among the police officers who use these tools.

- Inability of developers to control which police forces use their tools and for what purposes, leading to potential misuse.

- Developers cannot control whether police officers use the tools to make decisions or only to support them.

- Difficulty for developers in balancing the accessibility of their tools with the protection of their intellectual property rights.

- Limited awareness among the general public of the use of AI tools to profile or label individuals, which hinders their ability to raise concerns.

- General public distrust of the police and their use of AI tools.

Users and stakeholders

There was a noticeable research gap: existing work rarely included input from the people most affected by these systems, namely members of the general public. I therefore recruited a few of them first.

I recruited the AI researchers through the University of York mailing list. After that, I got in touch with the Chief of North Yorkshire Police, shared my project proposal, and asked whether any of their team would be interested in taking part. I managed to recruit a few interested members. The stakeholder groups now included members of the general public, police officers and AI researchers/developers, with police officers being both stakeholders and users of the AI tools.

Because the research goal required consideration of design aspects, I also recruited UX designers for their expertise in design thinking.

Strategy

Scheduling the Workshops

Scheduling was challenging: police officers reported a lack of technological proficiency, and members of the public had difficulty attending physical workshops. The first workshop was therefore conducted virtually through Google Meet and Miro.

The absence of stakeholders from the initial workshop allowed participants to express their genuine sentiments about the research. They emphasised the importance of multidisciplinary engagement and conversation among stakeholders, recognising their diverse needs. These insights reinforced findings from the secondary research and validated the decision to conduct a second workshop.

Participant Sample

Table 2: Workshop 01 participants

Table 3: Workshop 02 participants

Workshop Activities

The research aimed to co-design solutions based on participant feedback, so I favoured qualitative methods. Two participatory workshops were held to gather insights from stakeholders, users and the general public on AIAs and algorithmic systems in the UK's criminal justice system. Participatory design research was chosen for its collaborative nature, which breaks down barriers between designers, researchers, stakeholders and users and promotes creativity and trust. Through this, I gained a deep understanding of stakeholder values and of interdisciplinary solutions to algorithmic system challenges. This approach also enabled me to fill gaps in public participation and multidisciplinary engagement in AIA design, addressing crucial aspects of algorithmic system usage in the criminal justice system.

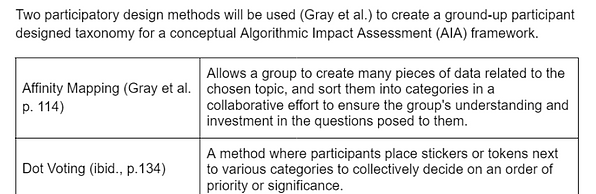

I chose two “gamestorming” activities for both workshops: affinity mapping and dot voting.

Figure 1: Gamestorming activities

Designing the Solution

Gathering data

The study gathered data primarily from workshop observations, including audio-video recordings and artefacts created through affinity mapping. In Workshop 01, participants used Miro's tools and virtual sticky notes for note-taking and voting. In Workshop 02, participants were given physical materials such as sticky notes and markers. These artefacts partially address Research Question 2.

Figure 2: Artefact created by Workshop 01 participants in Miro

Figure 3: Artefacts created by Workshop 02 participants

Data Synthesis

The response to RQ1 was derived from the co-designed artefacts produced through participatory design research, specifically through affinity mapping and dot voting. In addressing RQ2, I used affinity mapping to encourage participants to collaboratively organise their thoughts and insights into distinct categories, promoting a structured exploration of complex sociological issues. This clustering of related concepts made it easier to identify overarching themes and patterns across stakeholder perspectives. Dot voting then gave participants a mechanism to prioritise the concepts or categories that resonated most with them. This approach ensured that the collective judgement of the participants guided the identification of critical concerns, offering a clear and democratic means of highlighting the most pressing issues for Algorithmic Impact Assessments and their application in the UK's Criminal Justice System. Using these techniques, I fostered engagement and consensus building, and generated valuable insights into the multifaceted challenges at the intersection of AI technology and human values.

In qualitative research, the choice of methodological approach carries significant weight, as it shapes the entire investigative process and influences the quality of the insights gained. Given the multifaceted nature of this study and the need to unravel nuanced perspectives from diverse stakeholders, I chose Reflexive Thematic Analysis (RTA) as the most fitting approach. RTA not only aligns with the qualitative tradition of uncovering underlying themes and patterns in textual data but also stands out for its emphasis on researcher reflexivity. This suited my research, which required a critical examination of HCI-centred concepts and constant interrogation of how my own subjectivity, as a Computer Science graduate with a heavy emphasis on AI, shaped the creation of the themes. Certain biases were therefore inevitable, and it is important to acknowledge them.

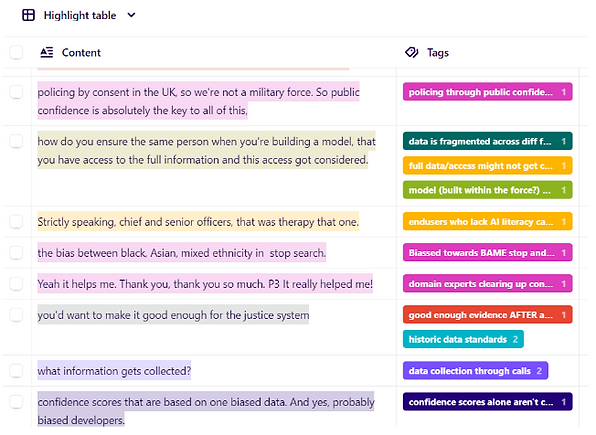

Transcription, coding and analysis were carried out in Dovetail.

Figure 4: Dovetail Insights Canvas

Figure 5: Dovetail Insights/Tag Table

Conclusion

What I would do differently

To strengthen the multidisciplinary approach, future work would involve participants from other areas of the criminal justice system, including legal experts and policy researchers, through in-depth one-on-one interviews. One limitation of the research is that it focused on North Yorkshire, which may not be representative of the broader UK context; future studies should recruit participants from different regions. Additionally, to improve future sessions, reading materials would be provided in advance to general public participants to give them better insight into algorithmic tools.